DAQA - Extended analysis#

This analysis will answer the following questions…

For the whole data set:

what % of projects have addresses?

what % of projects have completion dates?

what % of projects have associated firms but no architects?

what % of projects have associated architects but no firms?

what % of firms have ‘operating years’ recorded

Number of projects:

Before 1940

Between 1940-1980

Post 1980

Undated

For the 40-80 data set:

what % of projects have addresses?

what % of projects have completion dates?

what % of projects have associated firms but no architects?

what % of projects have associated architects but no firms?

what % of firms have ‘operating years’ recorded

Analytic questions:

Which architects were associated with Queensland governement projects i.e., Brisbane City Council, Department of Works, etc.?

Which architects have registrations recorded in DAQA between 1940-1980?

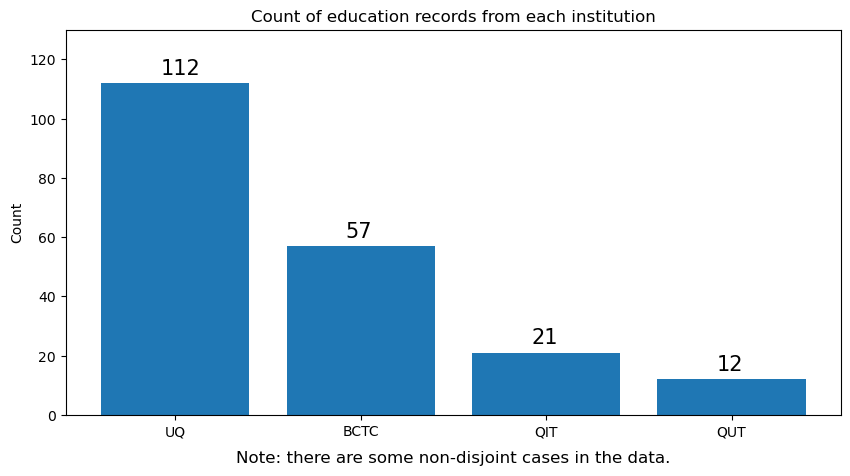

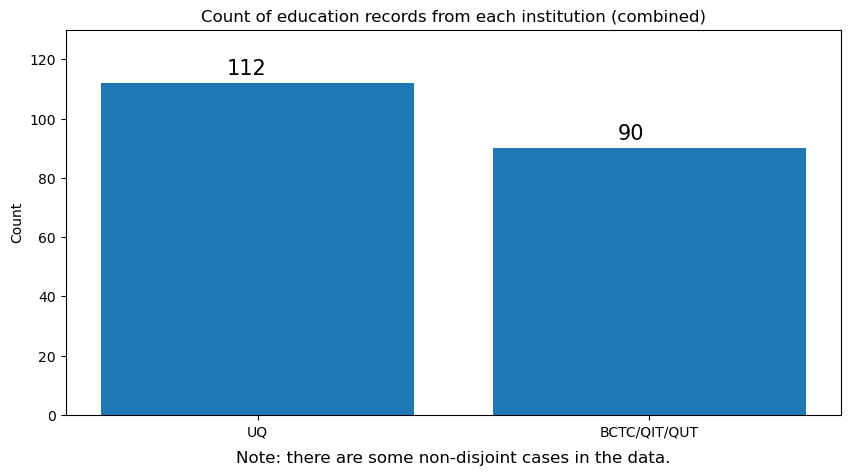

UQ vs (BCTC, QIT, QUT) vs the rest for whole data, 1940-1980, and also 1940 to present.

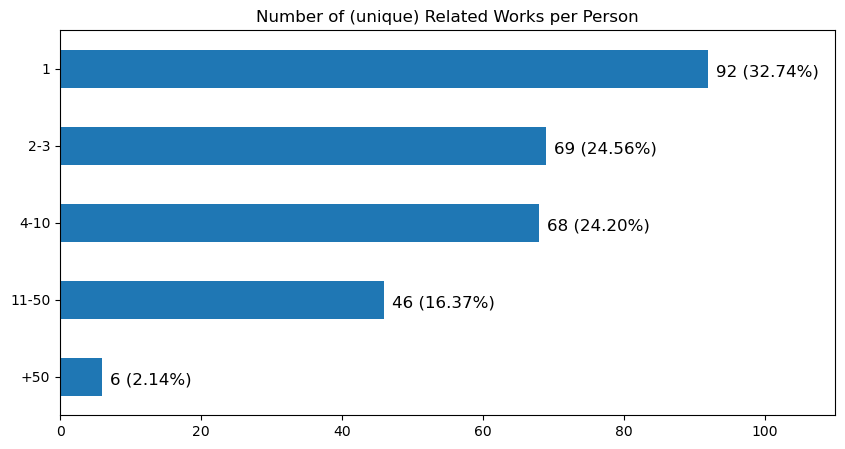

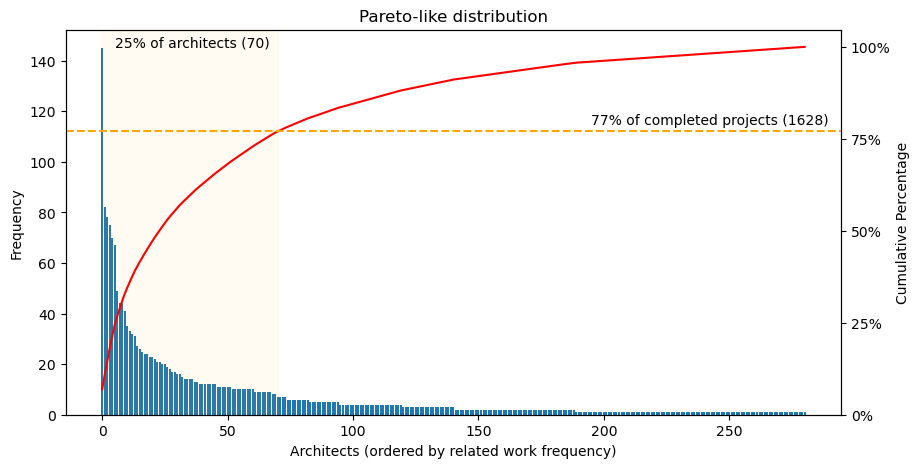

Repeat ‘how many completed related to a person’. 1940-1980 and the Pareto distribution

Number completed projects 1940-80

Number of works by year, most active vs rest 1940-1980

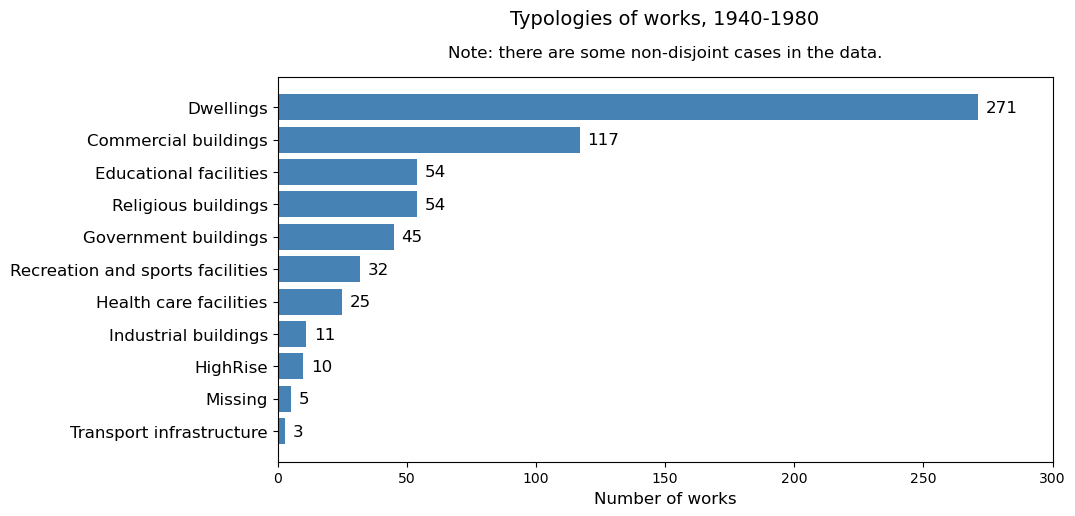

what % of the different typologies 1940-1980

Number of works by year by typology

what % of projects extant/demolished/modified 1940-1980

average and mean number of employers of the DAQA interviewed architects

names of Architects associated with the highest number of projects 1940-1980

names of firms associated with the highest number of projects 1940-1980

names of top 5 Architects associated with the highest number of each typology 1940-1980

names of top 5 firms associated with the highest number of projects 1940-1980

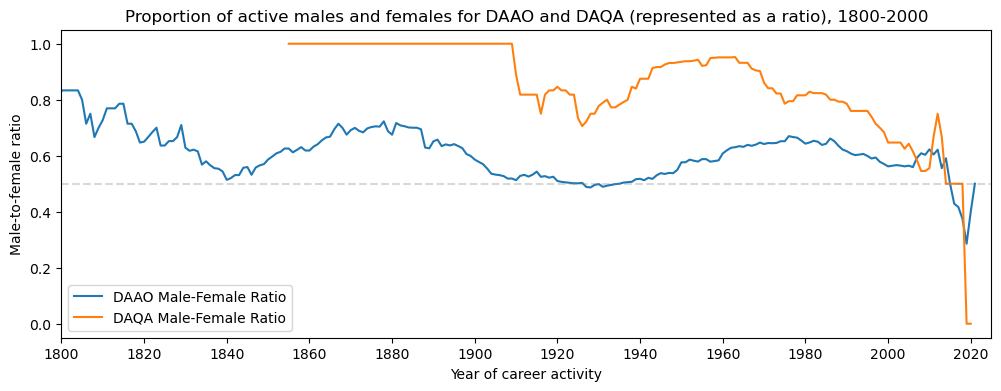

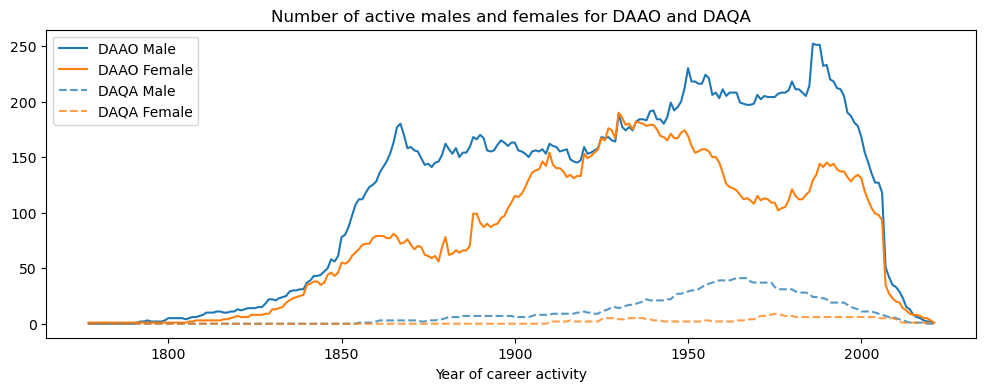

what % of architects who are women associated with projects 1940-1980

what % of architects who are women associated with projects after 1980

5 Firms with the longest timespan with the same name.

5 Firms with the longest timespan with successive names/known predecessor firms.

Show code cell source

import requests, gzip, io, os, json

# for data mgmt

import pandas as pd

import numpy as np

from collections import Counter

from datetime import datetime

import ast

# for plotting

import matplotlib.pyplot as plt

from matplotlib.ticker import PercentFormatter

import plotly.graph_objects as go

import seaborn as sns

from matplotlib.colors import to_rgba

import plotly.express as px

# for hypothesis testing

from scipy.stats import chi2_contingency

from scipy.stats import pareto

import warnings

warnings.filterwarnings("ignore")

# provide folder_name which contains uncompressed data i.e., csv and jsonl files

# only need to change this if you have already downloaded data

# otherwise data will be fetched from google drive

global folder_name

folder_name = 'data/local'

def fetch_small_data_from_github(fname):

url = f"https://raw.githubusercontent.com/acd-engine/jupyterbook/master/data/analysis/{fname}"

response = requests.get(url)

rawdata = response.content.decode('utf-8')

return pd.read_csv(io.StringIO(rawdata))

def fetch_date_suffix():

url = f"https://raw.githubusercontent.com/acd-engine/jupyterbook/master/data/analysis/date_suffix"

response = requests.get(url)

rawdata = response.content.decode('utf-8')

try: return rawdata[:12]

except: return None

def check_if_csv_exists_in_folder(filename):

try: return pd.read_csv(os.path.join(folder_name, filename), low_memory=False)

except: return None

def fetch_data(filetype='csv', acdedata='organization'):

filename = f'acde_{acdedata}_{fetch_date_suffix()}.{filetype}'

# first check if the data exists in current directory

data_from_path = check_if_csv_exists_in_folder(filename)

if data_from_path is not None: return data_from_path

urls = fetch_small_data_from_github('acde_data_gdrive_urls.csv')

sharelink = urls[urls.data == acdedata][filetype].values[0]

url = f'https://drive.google.com/u/0/uc?id={sharelink}&export=download&confirm=yes'

response = requests.get(url)

decompressed_data = gzip.decompress(response.content)

decompressed_buffer = io.StringIO(decompressed_data.decode('utf-8'))

try:

if filetype == 'csv': df = pd.read_csv(decompressed_buffer, low_memory=False)

else: df = [json.loads(jl) for jl in pd.read_json(decompressed_buffer, lines=True, orient='records')[0]]

return pd.DataFrame(df)

except: return None

def fetch_all_DAQA_data():

daqa_data_dict = dict()

for entity in ['event', 'organization', 'person', 'place', 'recognition', 'resource', 'work']:

daqa_this_entity = fetch_data(acdedata=entity)

daqa_data_dict[entity] = daqa_this_entity[daqa_this_entity.data_source.str.contains('DAQA')]

return daqa_data_dict

df_daqa_dict = fetch_all_DAQA_data() # 1 min if data is already downloaded

daqa_work = df_daqa_dict['work']

daqa_persons = df_daqa_dict['person']

daqa_orgs = df_daqa_dict['organization']

daqa_resources = df_daqa_dict['resource']

High-level summary of DAQA entities#

Before we jump into the analysis in response to the questions above, let’s take a look at a high-level of each DAQA entity i.e., person, organisation, work, resource.

Each entity has a class and we provide the count for each class for a given entity along with the total count of all classes. Next we output a count of all relationships between entities, and to this end, we generate detailled counts for all class-level relationships for each entity.

Show code cell source

# architects

print('Total number of persons:', daqa_persons.shape[0])

architect_count = daqa_persons['longterm_roles'].value_counts().reset_index()

architect_count['Proportion'] = round(architect_count['longterm_roles']/architect_count['longterm_roles'].sum(),3)

architect_count['Type'] = np.where(architect_count['index'].str.contains('non-architect'), 'non-architect', 'architect')

display(architect_count\

.groupby('Type')\

.sum()\

.reset_index()\

.rename(columns={'longterm_roles':'Frequency'})\

.set_index('Type')

.sort_values('Frequency', ascending=False))

# firms

print('\nTotal number of organisations:', daqa_orgs.shape[0])

firm_count = daqa_orgs['_class_ori'].value_counts().reset_index()

firm_count['Proportion'] = round(firm_count['_class_ori']/firm_count['_class_ori'].sum(),3)

display(firm_count\

.groupby('index')\

.sum()\

.reset_index()\

.rename(columns={'index':'Type', '_class_ori':'Frequency'})\

.set_index('Type')

.sort_values('Frequency', ascending=False))

# projects

print('\nTotal number of works i.e, projects:', daqa_work.shape[0])

project_count = daqa_work['_class_ori'].value_counts().reset_index()

project_count['Proportion'] = round(project_count['_class_ori']/project_count['_class_ori'].sum(),3)

display(project_count\

.groupby('index')\

.sum()\

.reset_index()\

.rename(columns={'index':'Type', '_class_ori':'Frequency'})\

.set_index('Type')

.sort_values('Frequency', ascending=False))

# articles

print('\nTotal number of resources i.e., articles, interviews:', daqa_resources.shape[0])

article_count = daqa_resources['_class_ori'].value_counts().reset_index()

article_count['Proportion'] = round(article_count['_class_ori']/article_count['_class_ori'].sum(),3)

display(article_count\

.groupby('index')\

.sum()\

.reset_index()\

.rename(columns={'index':'Type', '_class_ori':'Frequency'})\

.set_index('Type')

.sort_values('Frequency', ascending=False))

Total number of persons: 1103

| Frequency | Proportion | |

|---|---|---|

| Type | ||

| architect | 912 | 0.827 |

| non-architect | 191 | 0.173 |

Total number of organisations: 967

| Frequency | Proportion | |

|---|---|---|

| Type | ||

| "firm" | 907 | 0.938 |

| "education" | 39 | 0.040 |

| "organisation" | 15 | 0.016 |

| "government" | 6 | 0.006 |

Total number of works i.e, projects: 2203

| Frequency | Proportion | |

|---|---|---|

| Type | ||

| "structure" | 2203 | 1.0 |

Total number of resources i.e., articles, interviews: 7696

| Frequency | Proportion | |

|---|---|---|

| Type | ||

| "Photograph" | 3784 | 0.492 |

| "LineDrawing" | 1102 | 0.143 |

| "article" | 783 | 0.102 |

| "Image" | 742 | 0.096 |

| "Article" | 686 | 0.089 |

| "Audio" | 142 | 0.018 |

| "Transcript" | 128 | 0.017 |

| "Portrait" | 102 | 0.013 |

| "interview" | 92 | 0.012 |

| "Youtube" | 46 | 0.006 |

| "publication" | 46 | 0.006 |

| "Video" | 40 | 0.005 |

| "Spreadsheet" | 3 | 0.000 |

Show code cell source

relationship_cols = daqa_persons.iloc[:, 62:].columns

relevant_datasets = df_daqa_dict.keys()

relations = []

for this_df in relevant_datasets:

for idx,row in df_daqa_dict[this_df].iterrows():

for col in relationship_cols:

try:

if isinstance(row[col], str): relations.append(pd.json_normalize(ast.literal_eval(row[col])))

except: continue

relations = pd.concat(relations)

relations = relations.drop_duplicates()

# replace person with specfic role

# create dictionary of architects and their ids

arch_nonarch_dict = daqa_persons[['ori_id','longterm_roles']]

arch_nonarch_dict['_class_ori'] = np.where(arch_nonarch_dict['longterm_roles'].str.contains('non-architect'), 'non-architect', 'architect')

arch_nonarch_dict = arch_nonarch_dict.drop('longterm_roles', axis=1).set_index('ori_id').to_dict()['_class_ori']

relations['subject.ori_id'] = relations['subject.ori_id'].astype(str)

relations['object.ori_id'] = relations['object.ori_id'].astype(str)

relations['subject._class_ori'] = np.where(relations['subject._class'] == 'person',

relations['subject.ori_id'].map(arch_nonarch_dict),

relations['subject._class_ori'])

relations['object._class_ori'] = np.where(relations['object._class'] == 'person',

relations['object.ori_id'].map(arch_nonarch_dict),

relations['object._class_ori'])

# relations

print('Total number of relationships:', relations.shape[0])

display(relations.relation_class.value_counts()\

.reset_index()\

.rename(columns={'index':'relation_class','relation_class':'Frequency'})\

.assign(Proportion = lambda x: round(x['Frequency']/x['Frequency'].sum(),3))\

.set_index('relation_class')

.sort_values('Frequency', ascending=False))

# predicate terms

print('\nTotal number of unique predicates', relations['predicate.term'].nunique())

display(relations['predicate.term'].value_counts()\

.reset_index()\

.rename(columns={'index':'predicate.term','predicate.term':'Frequency'})\

.assign(Proportion = lambda x: round(x['Frequency']/x['Frequency'].sum(),3))\

.set_index('predicate.term')

.sort_values('Frequency', ascending=False))

Total number of relationships: 17451

| Frequency | Proportion | |

|---|---|---|

| relation_class | ||

| Work_RelatedResource | 4889 | 0.280 |

| Person_RelatedWork | 2313 | 0.133 |

| Person_RelatedPerson | 1952 | 0.112 |

| Work_RelatedPlace | 1843 | 0.106 |

| Person_RelatedOrganization | 1568 | 0.090 |

| Organization_RelatedWork | 1484 | 0.085 |

| Resource_RelatedResource | 924 | 0.053 |

| Person_RelatedResource | 671 | 0.038 |

| Resource_RelatedPerson | 610 | 0.035 |

| Organization_RelatedOrganization | 420 | 0.024 |

| Resource_RelatedOrganization | 195 | 0.011 |

| Work_RelatedOrganization | 177 | 0.010 |

| Resource_RelatedWork | 90 | 0.005 |

| Organization_RelatedResource | 83 | 0.005 |

| Resource_RelatedPlace | 77 | 0.004 |

| Person_RelatedRecognition | 60 | 0.003 |

| Resource_RelatedRecognition | 48 | 0.003 |

| Work_RelatedPerson | 31 | 0.002 |

| Organization_RelatedPerson | 7 | 0.000 |

| Person_RelatedPlace | 7 | 0.000 |

| Recognition_RelatedOrganization | 1 | 0.000 |

| Work_RelatedRecognition | 1 | 0.000 |

Total number of unique predicates 33

| Frequency | Proportion | |

|---|---|---|

| predicate.term | ||

| HasMedia | 6300 | 0.361 |

| WorkedOn | 3502 | 0.201 |

| LocatedIn | 1843 | 0.106 |

| Employment | 1289 | 0.074 |

| Reference | 1188 | 0.068 |

| RelatedTo | 636 | 0.036 |

| WorkedWith | 402 | 0.023 |

| TaughtBy | 357 | 0.020 |

| InfluencedBy | 244 | 0.014 |

| StudiedWith | 243 | 0.014 |

| PrecededBy | 221 | 0.013 |

| KnewSocially | 203 | 0.012 |

| succeededby | 189 | 0.011 |

| KnewProfessionally | 132 | 0.008 |

| StudiedAt | 118 | 0.007 |

| IsInvolvedIn | 97 | 0.006 |

| PartnerOf | 83 | 0.005 |

| DoneIn | 77 | 0.004 |

| DesignedBy | 63 | 0.004 |

| CollaboratedWith | 48 | 0.003 |

| KnewOf | 42 | 0.002 |

| TravelledTo | 28 | 0.002 |

| Awarded | 22 | 0.001 |

| ClientOf | 21 | 0.001 |

| MentoredBy | 18 | 0.001 |

| Founded | 16 | 0.001 |

| Became | 15 | 0.001 |

| WasInfluenceBy | 14 | 0.001 |

| Attended | 12 | 0.001 |

| TaughtAt | 9 | 0.001 |

| MergedWith | 7 | 0.000 |

| Read | 6 | 0.000 |

| Authored | 6 | 0.000 |

Show code cell source

def fetch_relation_details(relation_types, relations=relations):

this_relation = relations[relations['relation_class'].isin(relation_types)].fillna('-')

print(f'Total number of {relation_types[0].replace("Related","").replace("_","-")} relations:', this_relation.shape[0])

display(this_relation[['subject._class_ori','object._class_ori','predicate.term']]\

.value_counts()\

.reset_index()\

.rename(columns={0:'Frequency'})\

.sort_values('Frequency', ascending=False))

print('###################### PERSON RELATIONSHIPS ######################')

print('\n')

fetch_relation_details(['Person_RelatedWork','Work_RelatedPerson'])

print('\n')

fetch_relation_details(['Person_RelatedPerson'])

print('\n')

fetch_relation_details(['Person_RelatedOrganization','Organization_RelatedPerson'])

print('\n')

fetch_relation_details(['Person_RelatedResource','Resource_RelatedPerson'])

print('\n')

fetch_relation_details(['Person_RelatedRecognition'])

print('\n')

fetch_relation_details(['Person_RelatedPlace'])

print('\n')

print('###################### WORK RELATIONSHIPS ######################')

print('\n')

fetch_relation_details(['Work_RelatedResource','Resource_RelatedWork'])

print('\n')

fetch_relation_details(['Work_RelatedPlace'])

print('\n')

fetch_relation_details(['Work_RelatedRecognition'])

print('\n')

print('###################### ORGANISATION RELATIONSHIPS ######################')

print('\n')

fetch_relation_details(['Organization_RelatedWork','Work_RelatedOrganization'])

print('\n')

fetch_relation_details(['Organization_RelatedOrganization'])

print('\n')

fetch_relation_details(['Recognition_RelatedOrganization'])

print('###################### RESOURCE RELATIONSHIPS ######################')

print('\n')

fetch_relation_details(['Resource_RelatedResource'])

print('\n')

fetch_relation_details(['Resource_RelatedOrganization','Organization_RelatedResource'])

print('\n')

fetch_relation_details(['Resource_RelatedPlace'])

print('\n')

fetch_relation_details(['Resource_RelatedRecognition'])

print('\n')

###################### PERSON RELATIONSHIPS ######################

Total number of Person-Work relations: 2344

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | architect | structure | WorkedOn | 2157 |

| 1 | architect | structure | Reference | 97 |

| 2 | structure | architect | DesignedBy | 30 |

| 3 | architect | structure | InfluencedBy | 20 |

| 4 | architect | structure | StudiedAt | 8 |

| 5 | architect | structure | Employment | 7 |

| 6 | architect | structure | TravelledTo | 6 |

| 7 | architect | structure | TaughtAt | 4 |

| 9 | architect | structure | Attended | 3 |

| 8 | architect | structure | RelatedTo | 3 |

| 10 | architect | structure | KnewOf | 2 |

| 11 | non-architect | structure | Reference | 2 |

| 12 | architect | structure | Read | 1 |

| 13 | architect | structure | ClientOf | 1 |

| 14 | architect | structure | WorkedWith | 1 |

| 15 | non-architect | structure | WorkedOn | 1 |

| 16 | structure | architect | ClientOf | 1 |

Total number of Person-Person relations: 1952

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | architect | architect | WorkedWith | 359 |

| 1 | architect | architect | TaughtBy | 323 |

| 2 | architect | architect | Reference | 219 |

| 3 | architect | architect | StudiedWith | 210 |

| 4 | architect | architect | InfluencedBy | 192 |

| 5 | architect | architect | KnewSocially | 171 |

| 6 | architect | architect | Employment | 97 |

| 7 | architect | architect | KnewProfessionally | 97 |

| 8 | architect | architect | KnewOf | 36 |

| 9 | architect | non-architect | KnewProfessionally | 32 |

| 10 | architect | non-architect | StudiedWith | 31 |

| 11 | architect | non-architect | KnewSocially | 29 |

| 12 | architect | non-architect | TaughtBy | 24 |

| 13 | architect | non-architect | CollaboratedWith | 16 |

| 14 | architect | architect | MentoredBy | 16 |

| 15 | architect | non-architect | WorkedWith | 15 |

| 17 | architect | non-architect | Reference | 14 |

| 16 | architect | architect | CollaboratedWith | 14 |

| 18 | architect | architect | WasInfluenceBy | 12 |

| 19 | non-architect | architect | ClientOf | 10 |

| 20 | architect | non-architect | InfluencedBy | 8 |

| 21 | architect | architect | PartnerOf | 8 |

| 22 | architect | architect | ClientOf | 4 |

| 23 | non-architect | non-architect | Employment | 2 |

| 24 | non-architect | non-architect | KnewProfessionally | 2 |

| 25 | architect | non-architect | Employment | 2 |

| 26 | architect | non-architect | MentoredBy | 2 |

| 27 | architect | architect | RelatedTo | 2 |

| 28 | non-architect | non-architect | KnewSocially | 2 |

| 29 | non-architect | architect | Employment | 1 |

| 30 | non-architect | architect | Reference | 1 |

| 31 | architect | non-architect | KnewOf | 1 |

Total number of Person-Organization relations: 1575

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | architect | firm | Employment | 1137 |

| 1 | architect | education | StudiedAt | 108 |

| 2 | architect | firm | PartnerOf | 75 |

| 3 | architect | firm | Reference | 55 |

| 4 | architect | education | Employment | 35 |

| 5 | architect | firm | InfluencedBy | 19 |

| 6 | architect | firm | Founded | 15 |

| 7 | architect | firm | WorkedWith | 14 |

| 8 | architect | firm | TaughtBy | 10 |

| 9 | architect | firm | CollaboratedWith | 9 |

| 10 | architect | education | Reference | 9 |

| 11 | architect | organisation | Became | 8 |

| 15 | architect | education | TaughtAt | 5 |

| 17 | architect | education | InfluencedBy | 5 |

| 16 | architect | education | CollaboratedWith | 5 |

| 13 | architect | government | WorkedWith | 5 |

| 14 | architect | education | Attended | 5 |

| 12 | architect | organisation | Reference | 5 |

| 18 | architect | organisation | RelatedTo | 4 |

| 19 | non-architect | firm | Employment | 4 |

| 20 | architect | organisation | WorkedWith | 3 |

| 21 | architect | education | Read | 3 |

| 27 | architect | education | Authored | 2 |

| 31 | architect | firm | StudiedWith | 2 |

| 30 | non-architect | firm | Reference | 2 |

| 29 | architect | education | TravelledTo | 2 |

| 28 | architect | firm | MergedWith | 2 |

| 24 | architect | education | WorkedWith | 2 |

| 26 | architect | organisation | Attended | 2 |

| 23 | firm | architect | ClientOf | 2 |

| 22 | architect | organisation | Employment | 2 |

| 25 | firm | architect | KnewOf | 2 |

| 40 | architect | education | ClientOf | 1 |

| 47 | architect | education | WorkedOn | 1 |

| 46 | architect | firm | KnewOf | 1 |

| 45 | architect | firm | KnewProfessionally | 1 |

| 44 | architect | firm | KnewSocially | 1 |

| 43 | architect | education | RelatedTo | 1 |

| 42 | architect | firm | WasInfluenceBy | 1 |

| 41 | architect | government | Employment | 1 |

| 38 | architect | education | Awarded | 1 |

| 39 | architect | organisation | CollaboratedWith | 1 |

| 37 | architect | firm | ClientOf | 1 |

| 36 | firm | architect | Became | 1 |

| 35 | non-architect | education | StudiedAt | 1 |

| 34 | non-architect | education | Employment | 1 |

| 33 | firm | architect | CollaboratedWith | 1 |

| 32 | firm | architect | MergedWith | 1 |

| 48 | non-architect | organisation | Founded | 1 |

Total number of Person-Resource relations: 1281

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | interview | architect | Reference | 364 |

| 1 | architect | Photograph | HasMedia | 257 |

| 2 | architect | interview | IsInvolvedIn | 91 |

| 3 | interview | architect | RelatedTo | 86 |

| 4 | non-architect | interview | RelatedTo | 86 |

| 5 | interview | non-architect | RelatedTo | 85 |

| 6 | architect | interview | RelatedTo | 82 |

| 7 | architect | Image | HasMedia | 80 |

| 8 | interview | non-architect | Reference | 75 |

| 9 | architect | Portrait | HasMedia | 66 |

| 10 | non-architect | interview | IsInvolvedIn | 6 |

| 11 | architect | LineDrawing | HasMedia | 1 |

| 12 | architect | publication | Reference | 1 |

| 13 | non-architect | Photograph | HasMedia | 1 |

Total number of Person-Recognition relations: 60

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | architect | award | Awarded | 20 |

| 1 | architect | award | TravelledTo | 15 |

| 2 | architect | award | Reference | 8 |

| 3 | architect | award | Became | 5 |

| 4 | architect | award | Authored | 3 |

| 5 | architect | award | Attended | 2 |

| 6 | architect | award | Read | 2 |

| 7 | architect | award | RelatedTo | 1 |

| 8 | architect | award | WasInfluenceBy | 1 |

| 9 | architect | award | WorkedWith | 1 |

| 10 | non-architect | award | Authored | 1 |

| 11 | non-architect | award | Reference | 1 |

Total number of Person-Place relations: 7

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | architect | place | TravelledTo | 5 |

| 1 | architect | place | Reference | 1 |

| 2 | architect | place | RelatedTo | 1 |

###################### WORK RELATIONSHIPS ######################

Total number of Work-Resource relations: 4979

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | structure | Photograph | HasMedia | 3143 |

| 1 | structure | LineDrawing | HasMedia | 1088 |

| 2 | structure | Image | HasMedia | 651 |

| 3 | interview | structure | Reference | 90 |

| 4 | structure | Portrait | HasMedia | 7 |

Total number of Work-Place relations: 1843

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | structure | place | LocatedIn | 1843 |

Total number of Work-Recognition relations: 1

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | structure | award | Awarded | 1 |

###################### ORGANISATION RELATIONSHIPS ######################

Total number of Organization-Work relations: 1661

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | firm | structure | WorkedOn | 1343 |

| 1 | structure | firm | RelatedTo | 144 |

| 2 | firm | structure | RelatedTo | 141 |

| 3 | structure | firm | DesignedBy | 33 |

Total number of Organization-Organization relations: 420

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | firm | firm | PrecededBy | 221 |

| 1 | firm | firm | succeededby | 189 |

| 2 | firm | firm | MergedWith | 4 |

| 3 | firm | firm | CollaboratedWith | 2 |

| 4 | firm | firm | WorkedWith | 2 |

| 5 | firm | firm | Became | 1 |

| 6 | government | firm | ClientOf | 1 |

Total number of Recognition-Organization relations: 1

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | award | education | StudiedAt | 1 |

###################### RESOURCE RELATIONSHIPS ######################

Total number of Resource-Resource relations: 924

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | article | Article | HasMedia | 646 |

| 1 | interview | Audio | HasMedia | 127 |

| 2 | interview | Transcript | HasMedia | 83 |

| 3 | interview | Youtube | HasMedia | 45 |

| 4 | interview | Video | HasMedia | 22 |

| 5 | interview | publication | Reference | 1 |

Total number of Resource-Organization relations: 278

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | interview | education | Reference | 121 |

| 1 | interview | firm | Reference | 68 |

| 2 | firm | Photograph | HasMedia | 57 |

| 3 | firm | Image | HasMedia | 15 |

| 4 | firm | Portrait | HasMedia | 9 |

| 5 | interview | organisation | Reference | 6 |

| 6 | firm | LineDrawing | HasMedia | 2 |

Total number of Resource-Place relations: 77

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | interview | place | DoneIn | 77 |

Total number of Resource-Recognition relations: 48

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | interview | award | Reference | 48 |

Projects & firms#

Below are some statistics about project characteristics and firms in the DAQA dataset. Proportions under the PROJECTS subheading are calculated as a percentage of the total number of projects in the dataset. Proportions under the FIRMS subheading are calculated as a percentage of the total number of firms in the dataset.

It should be noted that all people related to projects are architects, we found no non-architects in the project records. Also all organisations related to projects are firms, we found no non-firm organisations in the project records.

We define a project with an address as one that has a populated

addressfieldWe define a project with a geocode date as one that has a populated

longitudeandlatitudefieldWe define a project with a completion date as one that has a populated

completion yearfieldWe define a project with an associated firm as one that has a populated

related_organizationsfieldWe define a project with no associated firms as one that has a no populated

related organizationsfieldWe define a project with an associated architect as one that has a populated

related_peoplefieldWe define a project with no associated architects as one that has no populated

related_peoplefieldWe define a firm with operating years as an organisation that has a populated

operationfield

Show code cell source

print('###################### PROJECTS ######################')

# load data

daqa_work = df_daqa_dict['work']

print('\nQ: How many projects are recorded in DAQA?')

count_projects = len(daqa_work)

print(f'A: There are {count_projects} projects in DAQA.')

# we define a project with an address as one that has a populated "address" field

print('\nQ: what % of projects have addresses?')

count_projects_with_address = len(daqa_work[daqa_work.coverage_range.apply(lambda x: "address" in x)])

prop_projects_with_address = round((count_projects_with_address / count_projects) * 100, 2)

print(f'A: {prop_projects_with_address}% ({count_projects_with_address}) of DAQA projects have addresses.')

# we define a project with a geocode date as one that has a populated "longitude" field

print('\nQ: what % of projects have geocodes (lat/long)?')

count_projects_with_geocodes = len(daqa_work[daqa_work.coverage_range.apply(lambda x: "latitude" in x)])

prop_projects_with_geocodes = round((count_projects_with_geocodes / count_projects) * 100, 2)

print(f'A: {prop_projects_with_geocodes}% ({count_projects_with_geocodes}) of DAQA projects have geocodes.')

# we define a project with a completion date as one that has a populated "completion year" field

print('\nQ: what % of projects have completion dates?')

count_projects_with_completion_dates = len(daqa_work[daqa_work.coverage_range.apply(lambda x: "date_end" in x)])

prop_projects_with_completion_dates = round((count_projects_with_completion_dates / count_projects) * 100, 2)

print(f'A: {prop_projects_with_completion_dates}% ({count_projects_with_completion_dates}) of DAQA projects have completion dates.')

# we conduct a sanity check to see if related people in daqa_work are all architects, we find no non-architects

# # load data

# daqa_persons = df_daqa_dict['person']

# non_architects = daqa_persons[daqa_persons['longterm_roles'].str.contains('non-architect')]['ori_id'].unique()

# len(daqa_work[daqa_work.related_people.apply(lambda x: pd.json_normalize(eval(x))['subject.ori_id'].values[0] in non_architects if isinstance(x, str) else False)])

# we conduct a sanity check to see if related organisations in daqa_work are all firms, we find no non-firms

# count_related_organizations_firms = len(daqa_work[daqa_work.related_organizations.apply(lambda x: "firm" in x if isinstance(x, str) else False)])

# count_related_organizations = len(daqa_work[daqa_work.related_organizations.notnull()])

# count_related_organizations_firms == count_related_organizations

# we define a project with an associated firm as one that has a populated "related_organizations" field

print('\nQ: how many projects have associated firms?')

count_projects_with_firms = len(daqa_work[daqa_work.related_organizations.notnull()])

prop_projects_with_firms = round((count_projects_with_firms / count_projects) * 100, 2)

print(f'A: {prop_projects_with_firms}% ({count_projects_with_firms}) of DAQA projects have associated firms.')

# we define a project with no associated architects as one that has no populated "related_people" field

print('\nQ: what % of projects have associated firms but no architects?')

count_projects_with_firms_no_architects = len(daqa_work[(daqa_work.related_organizations.notnull()) &\

(daqa_work.related_people.isnull())])

prop_projects_with_firms_no_architects = round((count_projects_with_firms_no_architects / count_projects) * 100, 2)

print(f'A: {prop_projects_with_firms_no_architects}% ({count_projects_with_firms_no_architects}) of DAQA projects have associated firms but no architects.')

# we define a project with an associated architect as one that has a populated "related_people" field

print('\nQ: how many projects have associated architects?')

count_projects_with_architects = len(daqa_work[daqa_work.related_people.notnull()])

prop_projects_with_architects = round((count_projects_with_architects / count_projects) * 100, 2)

print(f'A: {prop_projects_with_architects}% ({count_projects_with_architects}) of DAQA projects have associated architects.')

# we define a project with an associated architects as one that has a populated "related people" field

# and we define a project with no associated firms as one that has a no populated "related organizations" field

print('\nQ: what % of projects have associated architects but no firms?')

count_projects_with_architects_no_firms = len(daqa_work[(daqa_work.related_organizations.isnull()) &\

(daqa_work.related_people.notnull())])

prop_projects_with_architects_no_firms = round((count_projects_with_architects_no_firms / count_projects) * 100, 2)

print(f'A: {prop_projects_with_architects_no_firms}% ({count_projects_with_architects_no_firms}) of DAQA projects have associated architects but no firms.')

print('\n###################### FIRMS ######################')

# load data

daqa_orgs = df_daqa_dict['organization']

daqa_firms = daqa_orgs[daqa_orgs['_class_ori'].str.contains('firm')].copy()

print('\nQ: How many firms are recorded in DAQA?')

count_firms = len(daqa_firms)

print(f'A: There are {count_firms} firms in DAQA.')

# we define an operating firm as an organisation that has a populated "operation" field with a start date

print('\nQ: what % of firms have ‘operating years’ recorded (just start)?')

count_firms_with_operating_start = len(daqa_firms[daqa_firms.operation.apply(lambda x: "date_start" in x if isinstance(x, str) else False)])

prop_firms_with_operating_start = round((count_firms_with_operating_start / count_firms) * 100, 2)

print(f'A: {prop_firms_with_operating_start}% ({count_firms_with_operating_start}) of DAQA firms have operating years recorded.')

# we define an operating firm as an organisation that has a populated "operation" field with start and end dates

print('\nQ: what % of firms have ‘operating years’ recorded (start and end)?')

count_firms_with_operating_years = len(daqa_firms[daqa_firms.operation.apply(lambda x: ("date_start" in x) & ("date_end" in x) if isinstance(x, str) else False)])

prop_firms_with_operating_years = round((count_firms_with_operating_years / count_firms) * 100, 2)

print(f'A: {prop_firms_with_operating_years}% ({count_firms_with_operating_years}) of DAQA firms have operating years recorded.')

# top 5 firms - longest timespan

daqa_firms_with_op_yrs = daqa_firms[daqa_firms.operation.apply(lambda x: "date_start" in x if isinstance(x, str) else False)]

# extract the start and end years from the "operation" field

start_dates = []; end_dates = []

for index, row in daqa_firms_with_op_yrs.iterrows():

start_dates.append(int(pd.json_normalize(ast.literal_eval(row['operation']))['date_start.year'].values[0]))

try: end_dates.append(int(pd.json_normalize(ast.literal_eval(row['operation']))['date_end.year'].values[0]))

except: end_dates.append(None)

daqa_firms_with_op_yrs['start_yr'] = start_dates

daqa_firms_with_op_yrs['end_yr'] = end_dates

daqa_firms_with_op_yrs['diff'] = abs(daqa_firms_with_op_yrs['end_yr'] - daqa_firms_with_op_yrs['start_yr'])

print('\nQ: What are the top five firms with the longest timespan with the same name? (must have operating start and end years)')

display(daqa_firms_with_op_yrs.sort_values(by='diff', ascending=False)\

.head(5)[['primary_name', 'start_yr', 'end_yr', 'diff']])

###################### PROJECTS ######################

Q: How many projects are recorded in DAQA?

A: There are 2203 projects in DAQA.

Q: what % of projects have addresses?

A: 84.29% (1857) of DAQA projects have addresses.

Q: what % of projects have geocodes (lat/long)?

A: 65.68% (1447) of DAQA projects have geocodes.

Q: what % of projects have completion dates?

A: 60.37% (1330) of DAQA projects have completion dates.

Q: how many projects have associated firms?

A: 58.87% (1297) of DAQA projects have associated firms.

Q: what % of projects have associated firms but no architects?

A: 14.21% (313) of DAQA projects have associated firms but no architects.

Q: how many projects have associated architects?

A: 81.03% (1785) of DAQA projects have associated architects.

Q: what % of projects have associated architects but no firms?

A: 36.36% (801) of DAQA projects have associated architects but no firms.

###################### FIRMS ######################

Q: How many firms are recorded in DAQA?

A: There are 907 firms in DAQA.

Q: what % of firms have ‘operating years’ recorded (just start)?

A: 79.93% (725) of DAQA firms have operating years recorded.

Q: what % of firms have ‘operating years’ recorded (start and end)?

A: 39.69% (360) of DAQA firms have operating years recorded.

Q: What are the top five firms with the longest timespan with the same name? (must have operating start and end years)

| primary_name | start_yr | end_yr | diff | |

|---|---|---|---|---|

| 21223 | "Evans Deakin & Company (Engineers & Shipbuild... | 1910 | 1980.0 | 70.0 |

| 21594 | "Bates Smart & McCutcheon" | 1926 | 1995.0 | 69.0 |

| 21165 | "Brown and Broad LTD" | 1905 | 1967.0 | 62.0 |

| 20909 | "Queensland Housing Commission" | 1945 | 2004.0 | 59.0 |

| 21164 | "George Brockwell Gill Architect and Agent" | 1889 | 1943.0 | 54.0 |

Top five firms with the longest timespan with successive names/known predecessor firms.#

We inspect the top five firms with the longest timespan with successive names/known predecessor firms. We use network graphs to visualise the predecessor/successor relationships between firms. The graphs are interactive, so you can click on the nodes to see the firm names and the years they were active.

Show code cell source

daqa_firms_with_related_orgs = daqa_firms[daqa_firms.related_organizations.notnull()]

related_orgs_df = pd.DataFrame()

for index, row in daqa_firms_with_related_orgs.iterrows():

this_row_id = int(row['ori_id'])

this_org_related = pd.json_normalize(ast.literal_eval(row['related_organizations']))

related_orgs_df = related_orgs_df.append(this_org_related)

related_orgs_df.drop_duplicates(inplace=True)

related_orgs_df = related_orgs_df[related_orgs_df['predicate.term'].isin(['succeededby', 'PrecededBy','MergedWith'])]

related_orgs_ls = related_orgs_df[['subject.ori_id','object.ori_id']].values.tolist()

def get_connected_nodes(snapshot):

connected_nodes = []

for node_list in snapshot:

connected_node_set = set(node_list)

for connected_node in connected_nodes:

if connected_node & connected_node_set:

connected_node |= connected_node_set

break

else:

connected_nodes.append(connected_node_set)

connected_node_lists = [list(connected_node) for connected_node in connected_nodes]

return connected_node_lists

connected_node_lists = get_connected_nodes(related_orgs_ls)

connected_node_lists = get_connected_nodes(connected_node_lists)

connected_node_lists = get_connected_nodes(connected_node_lists)

first_date = []

last_date = []

for nodes in connected_node_lists[0:]:

this_nodes = daqa_firms_with_op_yrs[daqa_firms_with_op_yrs['ori_id'].astype(int).isin(nodes)]

all_dates = this_nodes['start_yr'].to_list()

all_dates.extend(this_nodes['start_yr'].to_list())

if len(all_dates) > 0:

first_date.append(min(all_dates))

last_date.append(max(all_dates))

else:

first_date.append(None)

last_date.append(None)

# dates

dts = pd.DataFrame([first_date, last_date]).T

# add nodes as column

dts.columns = ['first_date', 'last_date']

dts['nodes'] = connected_node_lists

dts['diff'] = dts['last_date'] - dts['first_date']

display(dts.sort_values(by='diff', ascending=False).head(5))

| first_date | last_date | nodes | diff | |

|---|---|---|---|---|

| 61 | 1862 | 1967 | [4795, 3838, 3846] | 105 |

| 4 | 1893 | 1995 | [2310, 2446, 2447, 2449, 4628, 2456, 2338, 502... | 102 |

| 2 | 1864 | 1963 | [5033, 2387, 2356, 2457, 4890, 4891, 4892, 4286] | 99 |

| 17 | 1926 | 2010 | [4576, 2497, 3868, 2440, 4459, 4460, 2445, 446... | 84 |

| 28 | 1938 | 2002 | [2336, 4994, 2501, 4511, 2413, 2478, 4527, 363... | 64 |

Firm 1 (105 years)

Show code cell source

def output_graph(data, iteration = 0):

import networkx as nx

from pyvis import network as net

g = net.Network(notebook=True,

height='500px',

width="100%",

cdn_resources = 'remote',

select_menu=True)

df = related_orgs_df[related_orgs_df['subject.ori_id']\

.isin(dts.sort_values(by='diff', ascending=False)\

.iloc[iteration]['nodes'])][['subject.label','object.label','predicate.term']]

dd = daqa_firms_with_op_yrs.copy()

dd['primary_name'] = dd['primary_name'].apply(lambda x: ast.literal_eval(x))

# Iterate over each row in the dataset

for _, row in df.iterrows():

subject = row['subject.label']

predicate = row['predicate.term']

obj = row['object.label']

try:

sub_start = dd[dd['primary_name'].str.contains(subject)]['start_yr'].values[0]

sub_end = dd[dd['primary_name'].str.contains(subject)]['end_yr'].values[0]

except:

sub_start = None

sub_end = None

try:

obj_start = dd[dd['primary_name'].str.contains(obj)]['start_yr'].values[0]

obj_end = dd[dd['primary_name'].str.contains(obj)]['end_yr'].values[0]

except:

obj_start = None

obj_end = None

g.add_node(subject, subject, title=f"{subject} \nStart: {sub_start} \nEnd: {sub_end}", color='blue')

g.add_node(obj, obj, title=f"{obj} \nStart: {obj_start} \nEnd: {obj_end}", color='blue')

# Add edges to the graph based on the relationship type

if predicate == 'succeededby':

g.add_edge(subject, obj, color='red', title=predicate, arrows='to')

elif predicate == 'PrecededBy':

g.add_edge(obj, subject, color='red', title='succeededby', arrows='to')

elif predicate == 'MergedWith':

g.add_edge(subject, obj, color='green', title=predicate, width=3)

g.add_edge(obj, subject, color='green', title=predicate, width=3)

var_options = """var_options = {

"nodes": {"font": {"size": 12}},

"interaction": {"hover": "True"}}

"""

g.set_options(var_options)

return g

display(daqa_firms_with_op_yrs[daqa_firms_with_op_yrs['ori_id'].astype(int)\

.isin(dts.sort_values(by='diff', ascending=False)\

.iloc[0]['nodes'])][['primary_name','ori_id','start_yr','end_yr']]\

.sort_values(by='start_yr', ascending=True))

g = output_graph(related_orgs_df[related_orgs_df['subject.ori_id']\

.isin(dts.sort_values(by='diff', ascending=False)\

.iloc[0]['nodes'])][['subject.label','object.label','predicate.term']], 0)

print('\n')

g.show(f"networkgraph_0.html")

| primary_name | ori_id | start_yr | end_yr | |

|---|---|---|---|---|

| 21757 | "Backhouse & Taylor" | 3838.0 | 1862 | 1863.0 |

| 21759 | "Furnival & Taylor" | 3846.0 | 1864 | NaN |

| 21265 | "T Taylor" | 4795.0 | 1967 | NaN |

Firm 2 (102 years)

Show code cell source

display(daqa_firms_with_op_yrs[daqa_firms_with_op_yrs['ori_id'].astype(int)\

.isin(dts.sort_values(by='diff', ascending=False)\

.iloc[1]['nodes'])][['primary_name','ori_id','start_yr','end_yr']]\

.sort_values(by='start_yr', ascending=True))

g = output_graph(related_orgs_df[related_orgs_df['subject.ori_id']\

.isin(dts.sort_values(by='diff', ascending=False)\

.iloc[1]['nodes'])][['subject.label','object.label','predicate.term']], 1)

print('\n')

g.show(f"networkgraph_1.html")

| primary_name | ori_id | start_yr | end_yr | |

|---|---|---|---|---|

| 20931 | "C.W. Chambers Architect and Consulting Engineer" | 4415.0 | 1893 | 1910.0 |

| 20932 | "McCredie Bros & Chambers" | 4416.0 | 1899 | 1892.0 |

| 21556 | "H.W. Atkinson & Chas. McLay" | 2338.0 | 1907 | 1918.0 |

| 21715 | "Chambers and Powell" | 2499.0 | 1911 | 1920.0 |

| 21273 | "Arnold Henry Conrad Architect" | 4808.0 | 1917 | 1917.0 |

| 20911 | "Atkinson and Conrad (1918-1927)" | 4316.0 | 1918 | 1927.0 |

| 21673 | "H.W. Atkinson & A.H. Conrad (1918-1927)" | 2456.0 | 1918 | 1927.0 |

| 21531 | "Chambers & Ford" | 2310.0 | 1920 | 1935.0 |

| 20934 | "Powell & Hutton Architects" | 4419.0 | 1922 | 1924.0 |

| 21626 | "Lange Powell Architect" | 2408.0 | 1924 | 1927.0 |

| 21591 | "Atkinson, Powell & Conrad (1927-1931)" | 2373.0 | 1927 | 1931.0 |

| 21572 | "H.W Atkinson & A.H Conrad (1931-1939)" | 2354.0 | 1931 | 1939.0 |

| 20933 | "Lange L Powell & Geo Rae Architects" | 4417.0 | 1931 | 1933.0 |

| 21716 | "Chambers & Hutton" | 2500.0 | 1931 | 1940.0 |

| 21637 | "Lange Powell" | 2419.0 | 1933 | 1938.0 |

| 21691 | "(Lange L.) Powell, Dods & Thorpe (PDT)" | 2475.0 | 1938 | NaN |

| 21664 | "A.H Conrad & T.B.F Gargett" | 2447.0 | 1939 | 1965.0 |

| 21612 | "Ford, Hutton & Newell" | 2394.0 | 1952 | 1958.0 |

| 21595 | "Lund, Hutton & Newell" | 2377.0 | 1959 | 1959.0 |

| 21704 | "Lund, Hutton, Newell, Black & Paulsen" | 2488.0 | 1960 | 1964.0 |

| 21296 | "Ian Black and Company (Cairns)" | 4852.0 | 1964 | NaN |

| 21666 | "Conrad Gargett & Partners (1965-1972)" | 2449.0 | 1964 | 1966.0 |

| 21104 | "Lund Hutton Newell Paulsen Pty Ltd" | 4628.0 | 1965 | NaN |

| 21663 | "Conrad Gargett & Partners (1972-1995)" | 2446.0 | 1972 | 1995.0 |

| 21418 | "Conrad & Gargett" | 5027.0 | 1995 | NaN |

Firm 3 (99 years)

Show code cell source

display(daqa_firms_with_op_yrs[daqa_firms_with_op_yrs['ori_id'].astype(int)\

.isin(dts.sort_values(by='diff', ascending=False)\

.iloc[2]['nodes'])][['primary_name','ori_id','start_yr','end_yr']]\

.sort_values(by='start_yr', ascending=True))

g = output_graph(related_orgs_df[related_orgs_df['subject.ori_id']\

.isin(dts.sort_values(by='diff', ascending=False)\

.iloc[2]['nodes'])][['subject.label','object.label','predicate.term']], 2)

print('\n')

g.show(f"networkgraph_2.html")

| primary_name | ori_id | start_yr | end_yr | |

|---|---|---|---|---|

| 21605 | "John Hall & Son" | 2387.0 | 1864 | 1896.0 |

| 21574 | "Hall & Dods" | 2356.0 | 1896 | 1916.0 |

| 21324 | "HENNESSY AND HENNESSY AND F.R. HALL" | 4890.0 | 1916 | NaN |

| 21325 | "F.R. HALL AND W. ALAN DEVEREUX" | 4891.0 | 1923 | 1927.0 |

| 21326 | "F R Hall Architect" | 4892.0 | 1927 | 1930.0 |

| 20907 | "F.R Hall and Cook" | 4286.0 | 1930 | 1939.0 |

| 21674 | "Harold M Cook & Walter J E Kerrison" | 2457.0 | 1939 | 1962.0 |

| 21424 | "Cook & Kerrison & Partners" | 5033.0 | 1963 | NaN |

Firm 4 (84 years)

Show code cell source

display(daqa_firms_with_op_yrs[daqa_firms_with_op_yrs['ori_id'].astype(int)\

.isin(dts.sort_values(by='diff', ascending=False)\

.iloc[3]['nodes'])][['primary_name','ori_id','start_yr','end_yr']]\

.sort_values(by='start_yr', ascending=True))

g = output_graph(related_orgs_df[related_orgs_df['subject.ori_id']\

.isin(dts.sort_values(by='diff', ascending=False)\

.iloc[3]['nodes'])][['subject.label','object.label','predicate.term']], 3)

print('\n')

g.show(f"networkgraph_3.html")

| primary_name | ori_id | start_yr | end_yr | |

|---|---|---|---|---|

| 21731 | "Arthur W. F. Bligh" | 3574.0 | 1926 | NaN |

| 21763 | "Bligh & Jessup" | 3870.0 | 1947 | 1952.0 |

| 20976 | "Bligh Jessup & Partners" | 4499.0 | 1953 | 1956.0 |

| 21662 | "Bligh Jessup Bretnall & Partners" | 2445.0 | 1957 | NaN |

| 21052 | "Colin W Jessup" | 4576.0 | 1961 | 1975.0 |

| 20944 | "Callaghan Robinson Architects" | 4459.0 | 1972 | 1972.0 |

| 20945 | "Noel Robinson Architects (& Partners)" | 4460.0 | 1973 | 1977.0 |

| 20946 | "Noel Robinson Built Environments" | 4461.0 | 1977 | 1986.0 |

| 21713 | "Bligh Jessup Robinson" | 2497.0 | 1987 | 1960.0 |

| 20947 | "Bligh Robinson" | 4462.0 | 1989 | 1990.0 |

| 21658 | "Noel Robinson Architects (2)" | 2440.0 | 1990 | 1999.0 |

| 21614 | "Bligh Voller Nield" | 2396.0 | 1997 | 2009.0 |

| 20948 | "Design Inc" | 4463.0 | 2000 | 2010.0 |

| 21761 | "BVN Architecture" | 3868.0 | 2009 | NaN |

| 20949 | "NRACOLAB" | 4464.0 | 2010 | 2020.0 |

Firm 5 (64 years)

Show code cell source

display(daqa_firms_with_op_yrs[daqa_firms_with_op_yrs['ori_id'].astype(int)\

.isin(dts.sort_values(by='diff', ascending=False)\

.iloc[4]['nodes'])][['primary_name','ori_id','start_yr','end_yr']]\

.sort_values(by='start_yr', ascending=True))

g = output_graph(related_orgs_df[related_orgs_df['subject.ori_id']\

.isin(dts.sort_values(by='diff', ascending=False)\

.iloc[4]['nodes'])][['subject.label','object.label','predicate.term']], 4)

print('\n')

g.show(f"networkgraph_4.html")

| primary_name | ori_id | start_yr | end_yr | |

|---|---|---|---|---|

| 21741 | "Job & Collin" | 3636.0 | 1938 | 1954.0 |

| 21694 | "C W T Fulton in Assn with A H Job & J M Collin" | 2478.0 | 1954 | 1955.0 |

| 21649 | "Aubrey H. Job & R. P. Froud (Job & Froud)" | 2431.0 | 1955 | 1976.0 |

| 21738 | "C W T Fulton in Assn with J M Collin" | 3633.0 | 1955 | 1960.0 |

| 20988 | "JM Collin & CWT Fulton" | 4511.0 | 1961 | 1966.0 |

| 21014 | "J G Gilmour" | 4537.0 | 1961 | 1966.0 |

| 21386 | "G B Boys" | 4994.0 | 1961 | 1966.0 |

| 21717 | "J M Collin & C W T Fulton" | 2501.0 | 1961 | 1966.0 |

| 21631 | "Fulton Collin Boys Gilmour Trotter & Partners" | 2413.0 | 1966 | 1981.0 |

| 21476 | "C W T Fulton" | 5085.0 | 1967 | NaN |

| 21004 | "RP Froud Architect" | 4527.0 | 1974 | 1986.0 |

| 21739 | "Fulton Gilmour Trotter & Moss" | 3634.0 | 1981 | 1998.0 |

| 21740 | "Fulton Trotter Moss" | 3635.0 | 1998 | 2002.0 |

| 21554 | "Fulton Trotter Architects" | 2336.0 | 2002 | NaN |

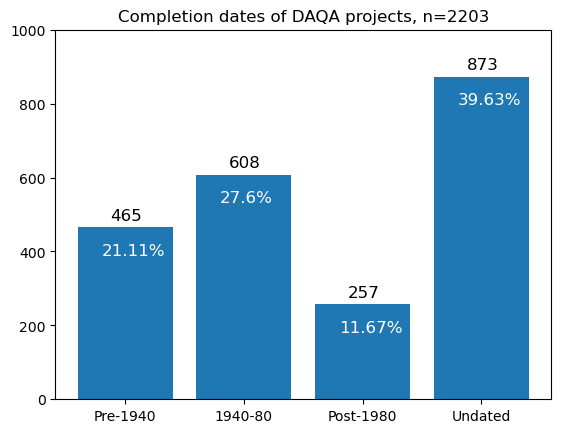

Projects by completion date#

Below are some temporal statistics about completed projects in the DAQA dataset. We divide the data into four periods: before 1940, between 1940-1980, post 1980, and undated. Proportions are calculated as a percentage of the total number of completed projects in the dataset.

Show code cell source

# store all rows with date_start in coverage_range

completion_dates_dict = {'Pre-1940': 0, '1940-80': 0, 'Post-1980': 0, 'Undated': 0}

projects_1940_80 = []

projects_1940_80_firms_dict = dict()

firms_with_projects_1940_80 = []

person_with_projects_1940_80 = []

resource_with_projects_1940_80 = []

for idx,row in daqa_work.iterrows():

if isinstance(row['coverage_range'], str):

if "date_end" in row['coverage_range']:

comp_yr = pd.json_normalize(ast.literal_eval(row['coverage_range'])['date_range'])['date_end.year'].values[0]

# add counter for comp_yr to dict

if int(comp_yr) < 1940: completion_dates_dict['Pre-1940'] += 1

elif int(comp_yr) >= 1940 and int(comp_yr) <= 1980:

completion_dates_dict['1940-80'] += 1

projects_1940_80.append(row['_id'])

# add related firm to list

if isinstance(row['related_organizations'], str):

related_firm = pd.json_normalize(ast.literal_eval(row['related_organizations']))['subject.ori_id'].values[0]

firms_with_projects_1940_80.append(related_firm)

projects_1940_80_firms_dict[row['_id']] = related_firm

# add related person to list

if isinstance(row['related_people'], str):

related_person = pd.json_normalize(ast.literal_eval(row['related_people']))['subject.ori_id'].values[0]

person_with_projects_1940_80.append(related_person)

# add related firm to list

if isinstance(row['related_resources'], str):

related_resource = pd.json_normalize(ast.literal_eval(row['related_resources']))['object.ori_id'].values[0]

resource_with_projects_1940_80.append(related_resource)

elif int(comp_yr) > 1980: completion_dates_dict['Post-1980'] += 1

else:

completion_dates_dict['Undated'] += 1

# number of projects in DAQA

print('\nThere are {} projects in DAQA.'.format(count_projects))

# number of pre-1940 projects

print('There are {} ({}%) projects with completion dates before 1940.'.\

format(completion_dates_dict['Pre-1940'], round((completion_dates_dict['Pre-1940']/count_projects)*100,2)))

# number of 1940-1980 projects

print('There are {} ({}%) projects with completion dates between 1940 and 1980.'.\

format(completion_dates_dict['1940-80'], round((completion_dates_dict['1940-80']/count_projects)*100,2)))

# number of post-1980 projects

print('There are {} ({}%) projects with completion dates after 1980.'.\

format(completion_dates_dict['Post-1980'], round((completion_dates_dict['Post-1980']/count_projects)*100,2)))

# number of undated projects

print('There are {} ({}%) projects with no completion dates.'\

.format(completion_dates_dict['Undated'], round((completion_dates_dict['Undated']/count_projects)*100,2)))

print('\n')

# plot completion_dates_dict as a bar chart

plt.bar(range(len(completion_dates_dict)), list(completion_dates_dict.values()), align='center')

plt.xticks(range(len(completion_dates_dict)), list(completion_dates_dict.keys()))

plt.title('Completion dates of DAQA projects, n=2203')

# add labels to bars with propotion in white inside top of bar

for i, v in enumerate(completion_dates_dict.values()):

plt.text(i - 0.125, v + 20, str(v), size=12)

plt.text(i - 0.2, v - 75, str(round((v/count_projects)*100,2)) + '%', color='white', size=12)

# make y axis start at 0

plt.ylim(0, 1000)

plt.show()

There are 2203 projects in DAQA.

There are 465 (21.11%) projects with completion dates before 1940.

There are 608 (27.6%) projects with completion dates between 1940 and 1980.

There are 257 (11.67%) projects with completion dates after 1980.

There are 873 (39.63%) projects with no completion dates.

High-level summary of DAQA entities between 1940-1980#

We are particularly interested in data between 1940-1980. Similar to what we generated for the whole dataset, we output high-level statistics for data related to projects in this 1940-1980 period.

Show code cell source

# architects

# filter data accordingly

daqapersons_1940_80 = daqa_persons[daqa_persons['ori_id'].astype(int).isin(person_with_projects_1940_80)].copy()

print('Total number of persons with a related project between 1940-1980:', daqapersons_1940_80.shape[0])

architect_count = daqapersons_1940_80['longterm_roles'].value_counts().reset_index()

architect_count['Proportion'] = round(architect_count['longterm_roles']/architect_count['longterm_roles'].sum(),3)

architect_count['Type'] = np.where(architect_count['index'].str.contains('non-architect'), 'non-architect', 'architect')

display(architect_count\

.groupby('Type')\

.sum()\

.reset_index()\

.rename(columns={'longterm_roles':'Frequency'})\

.set_index('Type')

.sort_values('Frequency', ascending=False))

# firms

# filter data accordingly

daqaorgs_1940_80 = daqa_orgs[daqa_orgs['ori_id'].astype(int).isin(firms_with_projects_1940_80)].copy()

print('\nTotal number of organisations with a related project between 1940-1980:', daqaorgs_1940_80.shape[0])

firm_count = daqaorgs_1940_80['_class_ori'].value_counts().reset_index()

firm_count['Proportion'] = round(firm_count['_class_ori']/firm_count['_class_ori'].sum(),3)

display(firm_count\

.groupby('index')\

.sum()\

.reset_index()\

.rename(columns={'index':'Type', '_class_ori':'Frequency'})\

.set_index('Type')

.sort_values('Frequency', ascending=False))

# projects

# filter data accordingly

daqawork_1940_80 = daqa_work[daqa_work['_id'].isin(projects_1940_80)].copy()

print('\nTotal number of works between 1940-1980 i.e, projects:', daqawork_1940_80.shape[0])

project_count = daqawork_1940_80['_class_ori'].value_counts().reset_index()

project_count['Proportion'] = round(project_count['_class_ori']/project_count['_class_ori'].sum(),3)

display(project_count\

.groupby('index')\

.sum()\

.reset_index()\

.rename(columns={'index':'Type', '_class_ori':'Frequency'})\

.set_index('Type')

.sort_values('Frequency', ascending=False))

# articles

# filter data accordingly

daqaresources_1940_80 = daqa_resources[daqa_resources['ori_id'].astype(int).isin(resource_with_projects_1940_80)].copy()

print('\nTotal number of resources with a related project between 1940-1980 i.e., articles, interviews:', daqaresources_1940_80.shape[0])

article_count = daqaresources_1940_80['_class_ori'].value_counts().reset_index()

article_count['Proportion'] = round(article_count['_class_ori']/article_count['_class_ori'].sum(),3)

display(article_count\

.groupby('index')\

.sum()\

.reset_index()\

.rename(columns={'index':'Type', '_class_ori':'Frequency'})\

.set_index('Type')

.sort_values('Frequency', ascending=False))

Total number of persons with a related project between 1940-1980: 113

| Frequency | Proportion | |

|---|---|---|

| Type | ||

| architect | 112 | 0.991 |

| non-architect | 1 | 0.009 |

Total number of organisations with a related project between 1940-1980: 101

| Frequency | Proportion | |

|---|---|---|

| Type | ||

| "firm" | 101 | 1.0 |

Total number of works between 1940-1980 i.e, projects: 608

| Frequency | Proportion | |

|---|---|---|

| Type | ||

| "structure" | 608 | 1.0 |

Total number of resources with a related project between 1940-1980 i.e., articles, interviews: 471

| Frequency | Proportion | |

|---|---|---|

| Type | ||

| "Photograph" | 288 | 0.611 |

| "LineDrawing" | 67 | 0.142 |

| "Image" | 62 | 0.132 |

| "article" | 47 | 0.100 |

| "interview" | 3 | 0.006 |

| "Portrait" | 2 | 0.004 |

| "publication" | 2 | 0.004 |

Show code cell source

relations_1940_1980 = []

for this_df in [daqapersons_1940_80,daqaorgs_1940_80,daqawork_1940_80,daqaresources_1940_80]:

for idx,row in this_df.iterrows():

for col in relationship_cols:

try:

if isinstance(row[col], str): relations_1940_1980.append(pd.json_normalize(ast.literal_eval(row[col])))

except: continue

relations_1940_1980 = pd.concat(relations_1940_1980)

relations_1940_1980 = relations_1940_1980.drop_duplicates()

relations_1940_1980['subject.ori_id'] = relations_1940_1980['subject.ori_id'].astype(str)

relations_1940_1980['object.ori_id'] = relations_1940_1980['object.ori_id'].astype(str)

relations_1940_1980['subject._class_ori'] = np.where(relations_1940_1980['subject._class'] == 'person',

relations_1940_1980['subject.ori_id'].map(arch_nonarch_dict),

relations_1940_1980['subject._class_ori'])

relations_1940_1980['object._class_ori'] = np.where(relations_1940_1980['object._class'] == 'person',

relations_1940_1980['object.ori_id'].map(arch_nonarch_dict),

relations_1940_1980['object._class_ori'])

# relations

print('Total number of relationships for entities related to 1940-1980 works:', relations_1940_1980.shape[0])

display(relations_1940_1980.relation_class.value_counts()\

.reset_index()\

.rename(columns={'index':'relation_class','relation_class':'Frequency'})\

.assign(Proportion = lambda x: round(x['Frequency']/x['Frequency'].sum(),3))\

.set_index('relation_class')

.sort_values('Frequency', ascending=False))

# predicate terms

print('\nTotal number of unique predicates for entities related to 1940-1980 works:', relations_1940_1980['predicate.term'].nunique())

display(relations_1940_1980['predicate.term'].value_counts()\

.reset_index()\

.rename(columns={'index':'predicate.term','predicate.term':'Frequency'})\

.assign(Proportion = lambda x: round(x['Frequency']/x['Frequency'].sum(),3))\

.set_index('predicate.term')

.sort_values('Frequency', ascending=False))

Total number of relationships for entities related to 1940-1980 works: 8399

| Frequency | Proportion | |

|---|---|---|

| relation_class | ||

| Work_RelatedResource | 2017 | 0.240 |

| Person_RelatedWork | 1472 | 0.175 |

| Person_RelatedPerson | 1218 | 0.145 |

| Organization_RelatedWork | 1091 | 0.130 |

| Person_RelatedOrganization | 903 | 0.108 |

| Work_RelatedPlace | 580 | 0.069 |

| Person_RelatedResource | 293 | 0.035 |

| Resource_RelatedPerson | 253 | 0.030 |

| Organization_RelatedOrganization | 183 | 0.022 |

| Work_RelatedOrganization | 168 | 0.020 |

| Resource_RelatedResource | 49 | 0.006 |

| Organization_RelatedResource | 43 | 0.005 |

| Resource_RelatedOrganization | 43 | 0.005 |

| Resource_RelatedWork | 38 | 0.005 |

| Person_RelatedRecognition | 22 | 0.003 |

| Work_RelatedPerson | 15 | 0.002 |

| Organization_RelatedPerson | 5 | 0.001 |

| Resource_RelatedRecognition | 3 | 0.000 |

| Resource_RelatedPlace | 2 | 0.000 |

| Person_RelatedPlace | 1 | 0.000 |

Total number of unique predicates for entities related to 1940-1980 works: 32

| Frequency | Proportion | |

|---|---|---|

| predicate.term | ||

| WorkedOn | 2303 | 0.274 |

| HasMedia | 2288 | 0.272 |

| Employment | 760 | 0.090 |

| Reference | 602 | 0.072 |

| LocatedIn | 580 | 0.069 |

| RelatedTo | 436 | 0.052 |

| TaughtBy | 245 | 0.029 |

| WorkedWith | 230 | 0.027 |

| InfluencedBy | 160 | 0.019 |

| StudiedWith | 110 | 0.013 |

| KnewSocially | 104 | 0.012 |

| PrecededBy | 93 | 0.011 |

| succeededby | 86 | 0.010 |

| KnewProfessionally | 85 | 0.010 |

| StudiedAt | 57 | 0.007 |

| PartnerOf | 54 | 0.006 |

| IsInvolvedIn | 40 | 0.005 |

| DesignedBy | 38 | 0.005 |

| KnewOf | 30 | 0.004 |

| CollaboratedWith | 19 | 0.002 |

| TravelledTo | 15 | 0.002 |

| ClientOf | 11 | 0.001 |

| WasInfluenceBy | 11 | 0.001 |

| MentoredBy | 10 | 0.001 |

| Founded | 7 | 0.001 |

| Became | 6 | 0.001 |

| Awarded | 5 | 0.001 |

| Read | 4 | 0.000 |

| Attended | 3 | 0.000 |

| TaughtAt | 3 | 0.000 |

| MergedWith | 2 | 0.000 |

| DoneIn | 2 | 0.000 |

Show code cell source

print('###################### PERSON RELATIONSHIPS ######################')

print('\n')

fetch_relation_details(['Person_RelatedWork','Work_RelatedPerson'], relations=relations_1940_1980)

print('\n')

fetch_relation_details(['Person_RelatedPerson'], relations=relations_1940_1980)

print('\n')

fetch_relation_details(['Person_RelatedOrganization','Organization_RelatedPerson'], relations=relations_1940_1980)

print('\n')

fetch_relation_details(['Person_RelatedResource','Resource_RelatedPerson'], relations=relations_1940_1980)

print('\n')

fetch_relation_details(['Person_RelatedRecognition'], relations=relations_1940_1980)

print('\n')

fetch_relation_details(['Person_RelatedPlace'], relations=relations_1940_1980)

print('\n')

print('###################### WORK RELATIONSHIPS ######################')

print('\n')

fetch_relation_details(['Work_RelatedResource','Resource_RelatedWork'], relations=relations_1940_1980)

print('\n')

fetch_relation_details(['Work_RelatedPlace'], relations=relations_1940_1980)

print('\n')

fetch_relation_details(['Work_RelatedRecognition'], relations=relations_1940_1980)

print('\n')

print('###################### ORGANISATION RELATIONSHIPS ######################')

print('\n')

fetch_relation_details(['Organization_RelatedWork','Work_RelatedOrganization'], relations=relations_1940_1980)

print('\n')

fetch_relation_details(['Organization_RelatedOrganization'], relations=relations_1940_1980)

print('\n')

fetch_relation_details(['Recognition_RelatedOrganization'])

print('###################### RESOURCE RELATIONSHIPS ######################')

print('\n')

fetch_relation_details(['Resource_RelatedResource'], relations=relations_1940_1980)

print('\n')

fetch_relation_details(['Resource_RelatedOrganization','Organization_RelatedResource'], relations=relations_1940_1980)

print('\n')

fetch_relation_details(['Resource_RelatedPlace'], relations=relations_1940_1980)

print('\n')

fetch_relation_details(['Resource_RelatedRecognition'], relations=relations_1940_1980)

print('\n')

###################### PERSON RELATIONSHIPS ######################

Total number of Person-Work relations: 1487

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | architect | structure | WorkedOn | 1352 |

| 1 | architect | structure | Reference | 86 |

| 2 | architect | structure | InfluencedBy | 16 |

| 3 | structure | architect | DesignedBy | 14 |

| 4 | architect | structure | StudiedAt | 4 |

| 5 | architect | structure | Employment | 3 |

| 6 | architect | structure | TravelledTo | 3 |

| 7 | architect | structure | Attended | 2 |

| 8 | architect | structure | TaughtAt | 2 |

| 9 | non-architect | structure | Reference | 2 |

| 10 | architect | structure | ClientOf | 1 |

| 11 | architect | structure | KnewOf | 1 |

| 12 | structure | architect | ClientOf | 1 |

Total number of Person-Person relations: 1218

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | architect | architect | TaughtBy | 232 |

| 1 | architect | architect | WorkedWith | 209 |

| 2 | architect | architect | Reference | 180 |

| 3 | architect | architect | InfluencedBy | 127 |

| 4 | architect | architect | StudiedWith | 100 |

| 5 | architect | architect | KnewSocially | 99 |

| 6 | architect | architect | Employment | 84 |

| 7 | architect | architect | KnewProfessionally | 71 |

| 8 | architect | architect | KnewOf | 26 |

| 9 | architect | non-architect | KnewProfessionally | 13 |

| 10 | architect | architect | MentoredBy | 10 |

| 11 | architect | architect | WasInfluenceBy | 9 |

| 13 | architect | non-architect | StudiedWith | 8 |

| 12 | architect | non-architect | Reference | 8 |

| 14 | architect | architect | CollaboratedWith | 5 |

| 15 | architect | architect | PartnerOf | 5 |

| 16 | architect | non-architect | KnewSocially | 5 |

| 17 | architect | non-architect | CollaboratedWith | 4 |

| 18 | architect | non-architect | WorkedWith | 4 |

| 19 | non-architect | architect | ClientOf | 4 |

| 20 | architect | non-architect | TaughtBy | 3 |

| 21 | architect | architect | ClientOf | 3 |

| 22 | architect | non-architect | InfluencedBy | 3 |

| 23 | architect | non-architect | Employment | 2 |

| 24 | architect | architect | RelatedTo | 2 |

| 25 | non-architect | architect | Employment | 1 |

| 26 | non-architect | architect | Reference | 1 |

Total number of Person-Organization relations: 908

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | architect | firm | Employment | 652 |

| 1 | architect | education | StudiedAt | 53 |

| 2 | architect | firm | Reference | 49 |

| 3 | architect | firm | PartnerOf | 49 |

| 4 | architect | education | Employment | 15 |

| 5 | architect | firm | InfluencedBy | 11 |

| 6 | architect | firm | WorkedWith | 11 |

| 7 | architect | firm | TaughtBy | 10 |

| 8 | architect | firm | Founded | 7 |

| 9 | architect | firm | CollaboratedWith | 6 |

| 10 | architect | education | Reference | 5 |

| 11 | architect | education | Read | 3 |

| 12 | architect | education | InfluencedBy | 3 |

| 13 | architect | organisation | Became | 3 |

| 14 | architect | government | WorkedWith | 3 |

| 20 | architect | firm | StudiedWith | 2 |

| 19 | non-architect | firm | Reference | 2 |

| 18 | architect | organisation | Reference | 2 |

| 17 | firm | architect | KnewOf | 2 |

| 15 | architect | organisation | WorkedWith | 2 |

| 16 | architect | organisation | Employment | 2 |

| 29 | architect | firm | WasInfluenceBy | 1 |

| 35 | architect | education | ClientOf | 1 |

| 34 | architect | firm | KnewProfessionally | 1 |

| 33 | architect | organisation | Attended | 1 |

| 32 | architect | firm | KnewOf | 1 |

| 31 | architect | organisation | CollaboratedWith | 1 |

| 30 | architect | education | WorkedOn | 1 |

| 27 | architect | education | TaughtAt | 1 |

| 28 | architect | education | TravelledTo | 1 |

| 26 | firm | architect | Became | 1 |

| 25 | firm | architect | ClientOf | 1 |

| 24 | architect | education | CollaboratedWith | 1 |

| 23 | firm | architect | MergedWith | 1 |

| 22 | non-architect | firm | Employment | 1 |

| 21 | architect | organisation | RelatedTo | 1 |

| 36 | architect | education | Awarded | 1 |

Total number of Person-Resource relations: 546

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | interview | architect | Reference | 176 |

| 1 | architect | Photograph | HasMedia | 107 |

| 2 | interview | architect | RelatedTo | 57 |

| 3 | architect | interview | RelatedTo | 55 |

| 4 | architect | Image | HasMedia | 49 |

| 5 | architect | interview | IsInvolvedIn | 39 |

| 6 | architect | Portrait | HasMedia | 23 |

| 7 | interview | non-architect | RelatedTo | 18 |

| 8 | non-architect | interview | RelatedTo | 18 |

| 9 | interview | non-architect | Reference | 2 |

| 10 | architect | publication | Reference | 1 |

| 11 | non-architect | interview | IsInvolvedIn | 1 |

Total number of Person-Recognition relations: 22

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | architect | award | TravelledTo | 10 |

| 1 | architect | award | Awarded | 4 |

| 2 | architect | award | Reference | 4 |

| 3 | architect | award | Became | 2 |

| 4 | architect | award | Read | 1 |

| 5 | architect | award | WasInfluenceBy | 1 |

Total number of Person-Place relations: 1

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | architect | place | TravelledTo | 1 |

###################### WORK RELATIONSHIPS ######################

Total number of Work-Resource relations: 2055

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | structure | Photograph | HasMedia | 1477 |

| 1 | structure | LineDrawing | HasMedia | 397 |

| 2 | structure | Image | HasMedia | 141 |

| 3 | interview | structure | Reference | 38 |

| 4 | structure | Portrait | HasMedia | 2 |

Total number of Work-Place relations: 580

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | structure | place | LocatedIn | 580 |

Total number of Work-Recognition relations: 0

| subject._class_ori | object._class_ori | predicate.term | Frequency |

|---|

###################### ORGANISATION RELATIONSHIPS ######################

Total number of Organization-Work relations: 1259

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | firm | structure | WorkedOn | 950 |

| 1 | structure | firm | RelatedTo | 144 |

| 2 | firm | structure | RelatedTo | 141 |

| 3 | structure | firm | DesignedBy | 24 |

Total number of Organization-Organization relations: 183

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | firm | firm | PrecededBy | 93 |

| 1 | firm | firm | succeededby | 86 |

| 2 | firm | firm | CollaboratedWith | 2 |

| 3 | firm | firm | MergedWith | 1 |

| 4 | firm | firm | WorkedWith | 1 |

Total number of Recognition-Organization relations: 1

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | award | education | StudiedAt | 1 |

###################### RESOURCE RELATIONSHIPS ######################

Total number of Resource-Resource relations: 49

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | article | Article | HasMedia | 36 |

| 1 | interview | Audio | HasMedia | 6 |

| 2 | interview | Transcript | HasMedia | 5 |

| 3 | interview | Youtube | HasMedia | 2 |

Total number of Resource-Organization relations: 86

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | interview | firm | Reference | 41 |

| 1 | firm | Photograph | HasMedia | 31 |

| 2 | firm | Portrait | HasMedia | 9 |

| 3 | firm | LineDrawing | HasMedia | 2 |

| 4 | firm | Image | HasMedia | 1 |

| 5 | interview | education | Reference | 1 |

| 6 | interview | organisation | Reference | 1 |

Total number of Resource-Place relations: 2

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | interview | place | DoneIn | 2 |

Total number of Resource-Recognition relations: 3

| subject._class_ori | object._class_ori | predicate.term | Frequency | |

|---|---|---|---|---|

| 0 | interview | award | Reference | 3 |

1940-1980 projects and firms#

Below are some statistics about project characteristics and firms in the DAQA dataset of only projected completed between 1940 and 1980. Proportions under the 1940-1980 PROJECTS subheading are calculated as a percentage of the total number of projects completed between 1940 and 1980 in the dataset. Proportions under the 1940-1980 FIRMS subheading are calculated as a percentage of the total number of firms related to projects completed between 1940 and 1980 in the dataset.

We define an extant project as one that has a populated

is_demolishedfield and the value isFalseWe define a demolished project as one that has a populated

is_demolishedfield and the value isTrue

Show code cell source

print('###################### 1940-1980 PROJECTS ######################')

# filter data accordingly

daqawork_1940_80 = daqa_work[daqa_work['_id'].isin(projects_1940_80)].copy()

print('\nQ: How many projects are recorded in DAQA between 1940-1980?')

count_projects = len(daqawork_1940_80)

print(f'A: There are {count_projects} projects between 1940-1980.')

# we define a extant buiulding where "is_demolished" field is False

print('\nQ: what % of projects completed in 1940-1980 are extant?')

count_projects_extant = len(daqawork_1940_80[daqawork_1940_80['is_demolished'] == False])

prop_projects_extant = round((count_projects_extant / count_projects) * 100, 2)

print(f'A: {prop_projects_extant}% ({count_projects_extant}) of DAQA projects between 1940-1980 are extant.')

# we define a extant buiulding where "is_demolished" field is True

print('\nQ: what % of projects completed in 1940-1980 are demolished?')

count_projects_demolished = len(daqawork_1940_80[daqawork_1940_80['is_demolished'] == True])

prop_projects_demolished = round((count_projects_demolished / count_projects) * 100, 2)

print(f'A: {prop_projects_demolished}% ({count_projects_demolished}) of DAQA projects between 1940-1980 are demolished.')

print('\n*It should be noted that there are 5 DAQA projects between 1940-1980 with missing demolished information.')

# we define a project with an address as one that has a populated "address" field

print('\nQ: what % of projects between 1940-1980 have addresses?')

count_projects_with_address = len(daqawork_1940_80[daqawork_1940_80.coverage_range.apply(lambda x: "address" in x)])

prop_projects_with_address = round((count_projects_with_address / count_projects) * 100, 2)

print(f'A: {prop_projects_with_address}% ({count_projects_with_address}) of DAQA projects between 1940-1980 have addresses.')

# we define a project with a geocode date as one that has a populated "longitude" field

print('\nQ: what % of projects between 1940-1980 have geocodes (lat/long)?')

count_projects_with_geocodes = len(daqawork_1940_80[daqawork_1940_80.coverage_range.apply(lambda x: "latitude" in x)])

prop_projects_with_geocodes = round((count_projects_with_geocodes / count_projects) * 100, 2)

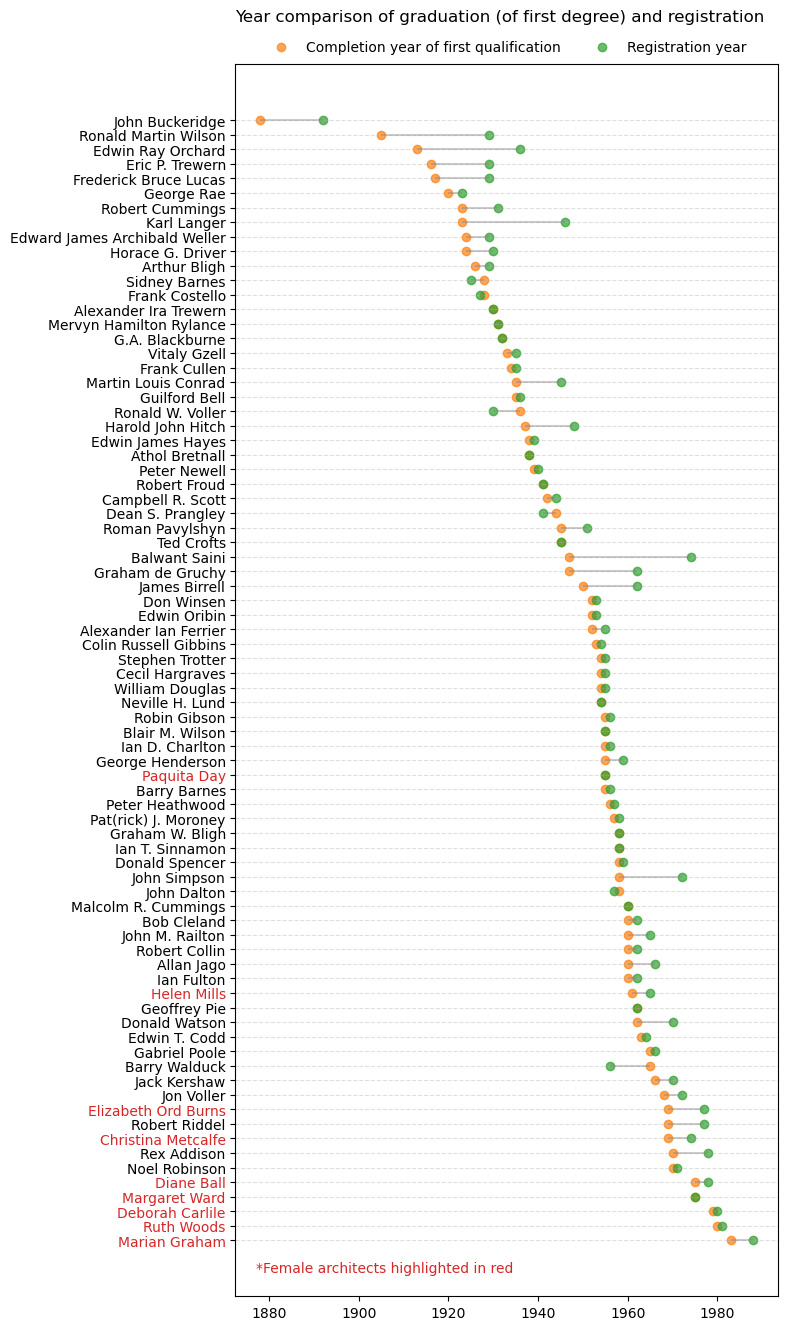

print(f'A: {prop_projects_with_geocodes}% ({count_projects_with_geocodes}) of DAQA projects between 1940-1980 have geocodes.')